"How can we use the power of artificial intelligence to help our biological intelligence, if it's affected by disease or injury?"

This is the question that led Aldo Faisal and his team at Imperial College London, UK, to build a technology that will allow people living with paralysis to perform real-world physical actions via a brain-computer device controlled by their minds.

Paralysis is often the result of spinal cord injuries, which prevent brain signals from traveling to the rest of the body and making the limbs move.

The idea behind the project is to develop a brain-computer interface that will be able to "automatically understand what the brain is thinking and translate that into actions for the brain," as Faisal, head of the research, explains.

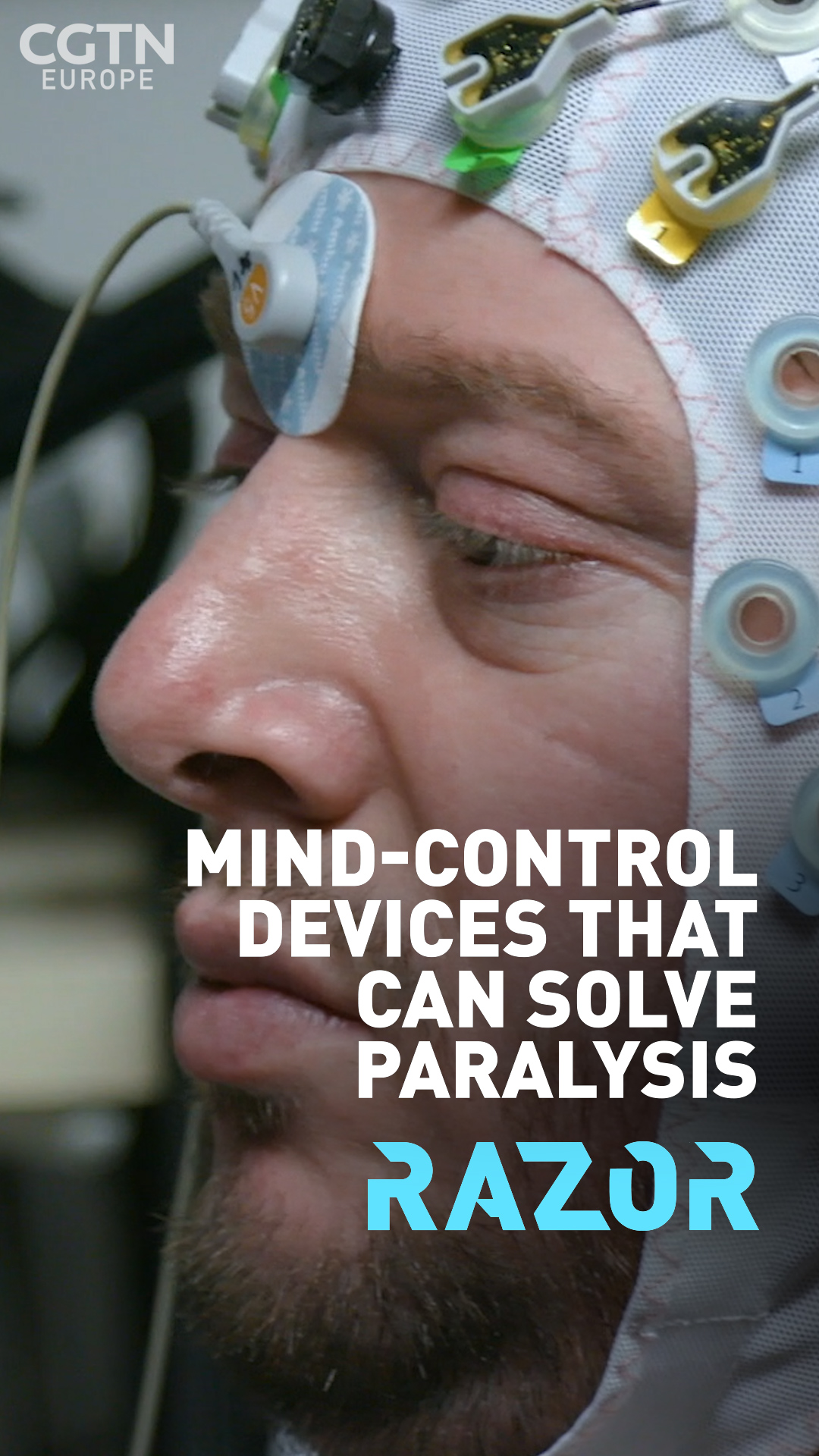

Signals from the brain are picked up through electroencephalography (EEG), a technique that requires electrodes to be attached to the scalp. Tom Nabarro has 64 of these electrodes attached to his head when RAZOR speaks to him about the technology.

Nabarro, who lost the use of his limbs after a snowboarding accident in 2007, is working with the Imperial College team to train for this year's Cybathlon – the world championship of robotic systems, at which people with disabilities compete to complete everyday tasks using state-of-the-art technology.

"The idea is that if I'm watching a video of the game, I can practice making the signals I would if I was playing a real game," explains Nabarro. /CGTN

Scientists team up with 'pilots' like Tom, people with real disabilities who test and advance their new technology.

"For me, it's been an amazing experience," says Nabarro. "The event itself has only just started. There's never been anything really like the Cybathlon – people call it Cyber Olympics. And it's something that has only just happened because now we're at a stage where there's so much assistive technology."

During preparation for the Cybathlon, the brain-computer interface needs to collect data about the brain signals that correspond to certain movements. But the human brain is a very complex system: it's made up of about 86 billion neurons, or nerve cells, which are largely responsible for the way we think, feel and act. Each neuron sends signals, or 'fires', at an average frequency of 4-10 hertz, or 4-10 times per second. Some even fire at a rate of 300-400Hz.

This is a huge amount of output, so picking out specific information from a mass of noisy signals is a difficult task. "It's incredibly noisy, to give you a sense, and it would be probably like whispering in a typhoon or something like that," explains research chief Faisal.

Tom Nabarro wearing the electrodes that allow the device to read his motor imageries. /CGTN

Artificial intelligence can spot trends and patterns in all this data and filter out the noise. But for the computer to identify and learn specific instructions, Nabarro needs to imagine repeating certain actions over and over, in exactly the same way. These imagined movements are called motor imageries.
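Why does repeating the same imagined action matter so much? The article does not describe the team's actual algorithms, so the following is only a rough illustration of the underlying statistics, not their method. It assumes a made-up 10Hz "brain pattern" buried in random noise five times larger, and shows that averaging 100 repeats brings the pattern out far more clearly than a single attempt. All names and numbers here (`template`, `noise_sd`, the sample rate) are hypothetical.

```python
import math
import random


def simulate_trial(template, noise_sd, rng):
    """Return one noisy recording: the hidden pattern plus Gaussian noise."""
    return [s + rng.gauss(0.0, noise_sd) for s in template]


def average_trials(trials):
    """Average many recordings sample by sample."""
    n = len(trials)
    return [sum(column) / n for column in zip(*trials)]


def rms_error(signal, template):
    """Root-mean-square distance between a recording and the hidden pattern."""
    squared = sum((a - b) ** 2 for a, b in zip(signal, template))
    return math.sqrt(squared / len(template))


rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Hypothetical pattern: one second of a 10Hz wave sampled 250 times per second.
template = [math.sin(2 * math.pi * 10 * t / 250) for t in range(250)]
noise_sd = 5.0  # assumed noise level, five times the pattern's amplitude

single = simulate_trial(template, noise_sd, rng)
trials = [simulate_trial(template, noise_sd, rng) for _ in range(100)]
averaged = average_trials(trials)

single_error = rms_error(single, template)
averaged_error = rms_error(averaged, template)

print(f"error from one attempt:   {single_error:.2f}")
print(f"error after 100 repeats:  {averaged_error:.2f}")
```

Averaging only works if every repeat contains the same underlying pattern, which is one way to see why Nabarro has to imagine each action "in exactly the same way". In theory the noise shrinks by roughly the square root of the number of repeats, so 100 repeats cut it to about a tenth.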

"You just have to repeat, repeat in imagery," says Nabarro. "So you're just trying to think and concentrate. So I try and clench and release one hand as fast as possible. And that sounds strange because I can't actually move the hand – but I can still visualize doing it."

So far Nabarro has been using the headset at the college once a month. The next step for him and the team at Imperial College is to use a wireless set. "We think this will make it easier for patients to use it on a daily basis, just pop it on their head," explains Faisal.

"We really believe that the way forward is to let algorithms learn how to best understand the data," he says. "That's how we do it ourselves. We learn from experience how to do things better. And that allows us to push the boundaries of what's possible, further ahead.

"The brain is very complex and we're just really starting to scrape off the edges of what we can start decoding. But already we have made a huge leap forward."